Company: Elphel Inc., SCI Institute

Project:

Long-range 3D perception is an essential capability for autonomous navigation. Compared to other systems, the additional use of Long Wave Infrared (LWIR) cameras enables environmental awareness in degraded visual conditions, such as fog, dust, or low illumination. By combining a multi-sensor approach with deep learning, we aim to mitigate the low contrast and low resolution often associated with thermal imaging, making it relevant for 3D sensing.

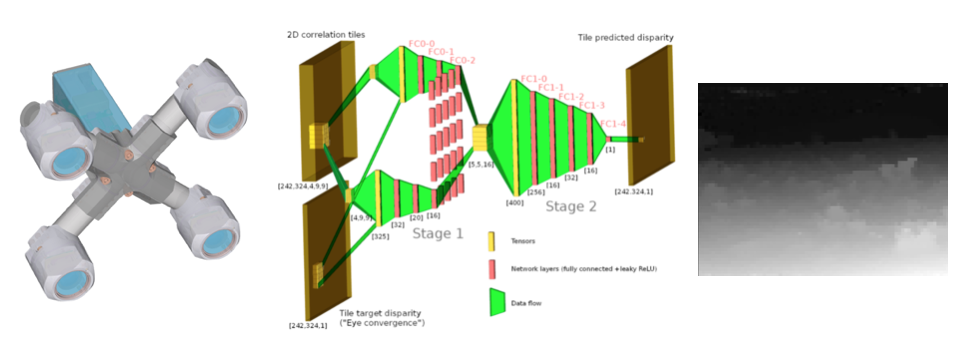

We built multi-input, single-output deep neural networks that enable long-range 3D perception from stereo thermal camera systems. Following an image pre-processing stage in the frequency domain, our network takes multiple images as inputs (multi-layer correlation maps and a target disparity map, or "eye convergence") to predict a final disparity map (or distance map). We assessed different network configurations, as well as the impact of the number of sensors and correlation maps on the metrics of interest.
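To make the terms "frequency-domain correlation" and "disparity map (or distance map)" more concrete, here is a minimal sketch. It is not the pipeline described above. It assumes generic 1D phase correlation between two image rows and the standard rectified pinhole-stereo relation Z = f·B/d. The function names and the focal length and baseline values are hypothetical and chosen only for illustration.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (illustration only)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out


def phase_correlation_shift(ref, other, eps=1e-12):
    """Estimate the circular shift (in samples) of `other` relative to `ref`.

    Returns the index of the correlation peak and the correlation curve.
    """
    fa = dft(ref)
    fb = dft(other)
    # Cross-power spectrum, normalized so that only phase information remains.
    cross = [a.conjugate() * b for a, b in zip(fa, fb)]
    normalized = [c / (abs(c) + eps) for c in cross]
    corr = [v.real for v in dft(normalized, inverse=True)]
    peak = max(range(len(corr)), key=corr.__getitem__)
    return peak, corr


def disparity_to_distance(disparity_px, focal_px, baseline_m):
    """Rectified pinhole stereo: Z = f * B / d (assumed model)."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity corresponds to infinite range
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    row_left = [0, 1, 4, 9, 3, 7, 2, 8, 5, 6, 1, 0, 2, 3, 9, 4]
    # Hypothetical second view: the same row circularly shifted by 3 samples.
    row_right = row_left[-3:] + row_left[:-3]
    shift, _ = phase_correlation_shift(row_left, row_right)
    # Hypothetical camera parameters: 1000 px focal length, 0.15 m baseline.
    print(shift, disparity_to_distance(shift, 1000.0, 0.15))
```

Because distance is inversely proportional to disparity, a fixed sub-pixel disparity error translates into a range error that grows roughly with the square of the distance. This is one reason low-contrast, low-resolution thermal imagery is challenging at long range.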

The combination of multiple sensors and AI-based analysis improved accuracy compared to simple stereo vision and traditional image analysis methods.